Maha Elbayad

AI Research Scientist at FAIR, Meta

I am a senior research scientist at Meta AI based in Menlo Park, CA. I specialize in massively multilingual and multimodal machine translation models for speech and text, working on projects like No Language Left Behind and SeamlessM4T. I completed my PhD in 2020 at Université Grenoble Alpes, where I worked at Laboratoire d’informatique de Grenoble and Inria under the supervision of Jakob Verbeek and Laurent Besacier. My doctoral thesis explored novel designs of sequence-to-sequence models for efficient offline and streaming machine translation.

Prior to my PhD, I graduated from Centrale Paris (Applied Mathematics) and ENS Paris-Saclay (M2 MVA Mathématiques, Vision, Apprentissage).

News

| Jan 15, 2025 | SeamlessM4T published in Nature. |

|---|---|

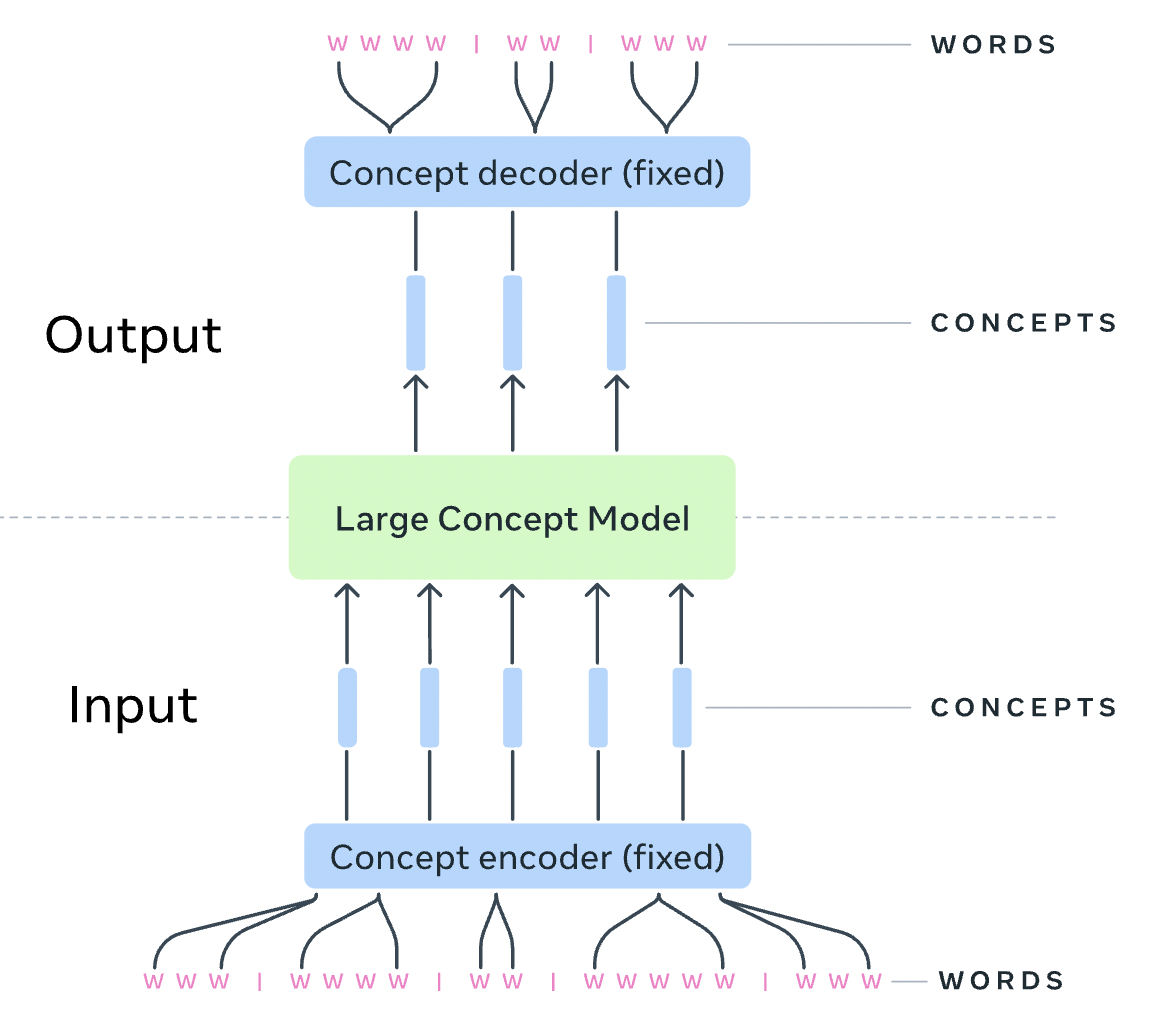

| Dec 10, 2024 | Publishing Large Concept Models (LCMs). This is a new direction in language modeling that moves beyond traditional token-level LLMs. |

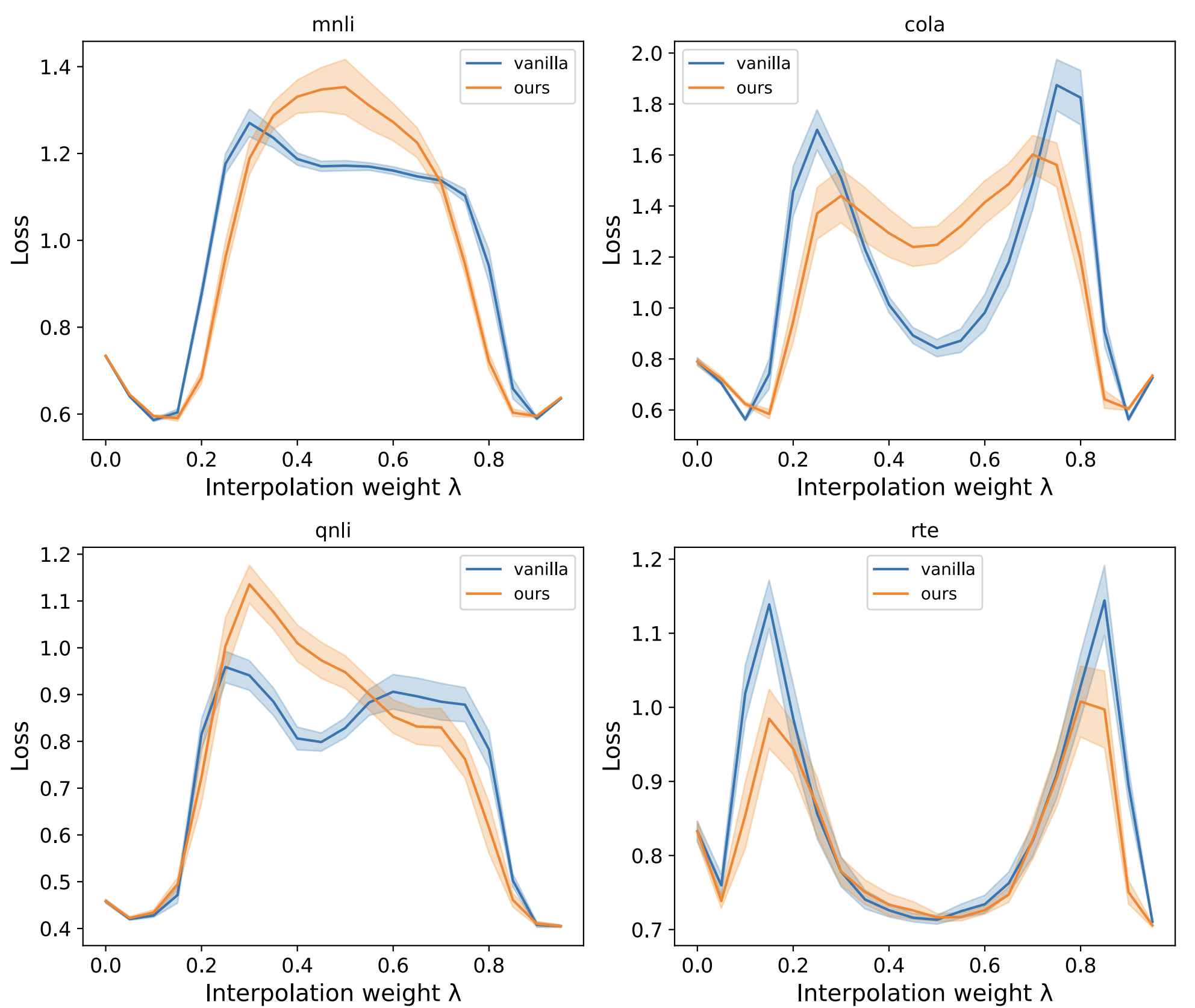

| Nov 07, 2024 | Merging Text Transformer Models from Different Initializations accepted by TMLR. |

| Jun 04, 2024 | No Language Left Behind published in Nature. |

| Nov 30, 2023 | Releasing Seamless communication models, a family of AI research models that enable more natural and authentic communication across languages (SeamlessExpressive, SeamlessStreaming and SeamlessM4T v2). Download the models from github or 🤗 |

| Oct 24, 2023 | TIME magazine selected SeamlessM4T as one of the best inventions of 2023! |