@article{elbayad2022fixing,

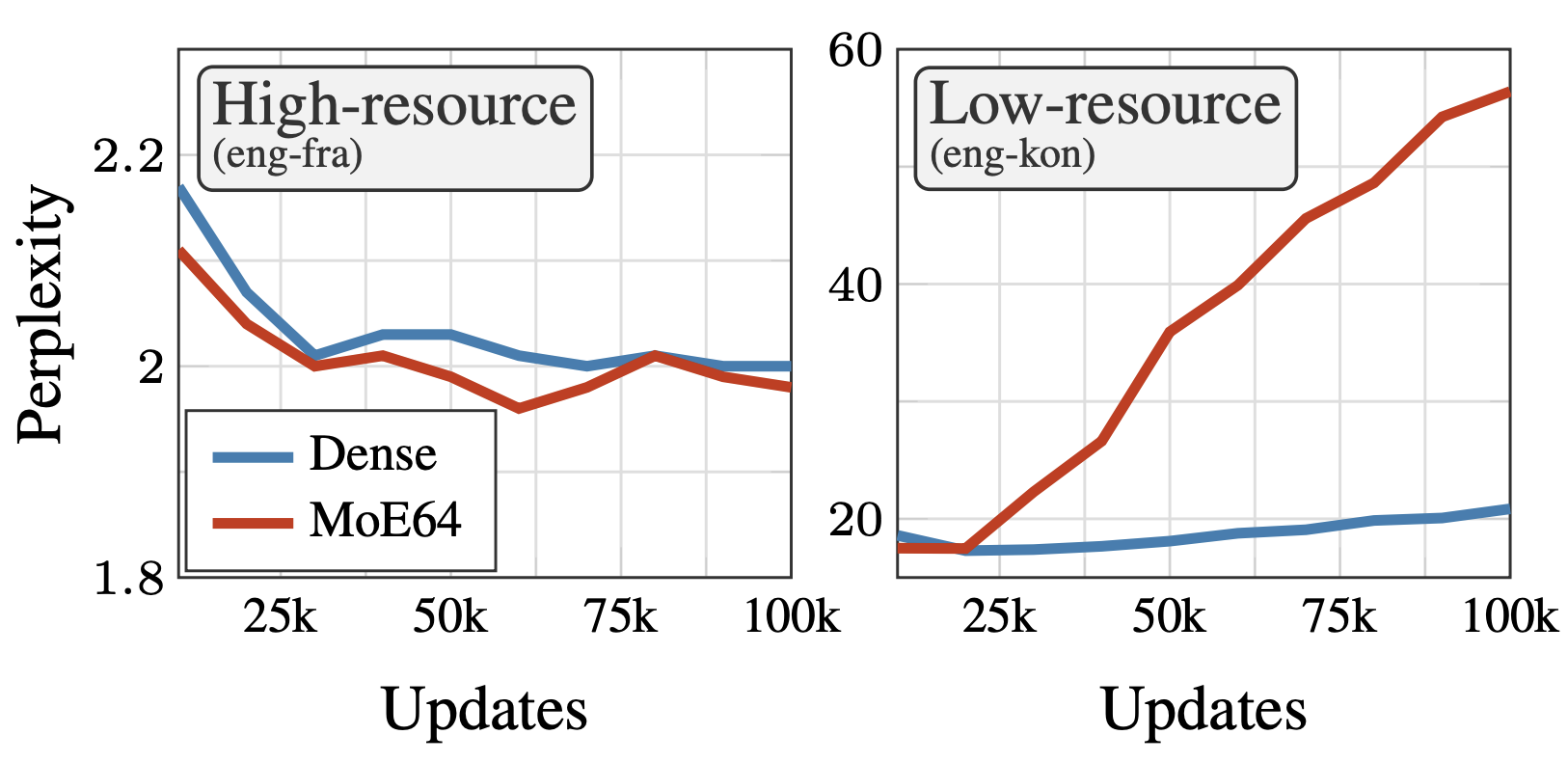

title={Fixing MoE Over-Fitting on Low-Resource Languages in Multilingual Machine Translation},

author={Elbayad, Maha and Sun, Anna and Bhosale, Shruti},

journal={arXiv preprint arXiv:2212.07571},

year={2022}

}

I am a senior research scientist at Meta AI based in Menlo Park, CA. I specialize in massively multilingual and multimodal machine translation models for speech and text, working on projects like No-Language-Left-Behind and SeamlessM4T. I completed my PhD in 2020 at Université Grenoble Alpes, where I worked at Laboratoire d'informatique de Grenoble and Inria under the supervision of Jakob Verbeek and Laurent Besacier.

My doctoral thesis explored novel designs of sequence-to-sequence models for efficient offline and streaming machine translation.

Prior to my PhD, I graduated from Centrale Paris (Applied Mathematics) and ENS Paris-Saclay (M2 MVA: Mathématiques, Vision, Apprentissage).

What's new?

- 2023-05-07 Jury member and keynote speaker at Think AI Hackathon at UM6P, Morocco. Check the participants' work here.

- 2022-07-06 We released No Language Left Behind, open-source models delivering high-quality translations in 200 languages.

- 2022-03-01 I started a new position as AI Research Scientist at Meta (Facebook) AI in Menlo Park.

- 2021-10-08 I won the 2020 EAMT Best Thesis Award! (ex aequo with Mattia Antonino Di Gangi).

Selected publications

Fixing MoE Over-Fitting on Low-Resource Languages in Multilingual Machine Translation

Maha Elbayad, Anna Sun, Shruti Bhosale

ArXiv, 2022

ArXiv Code Bibtex

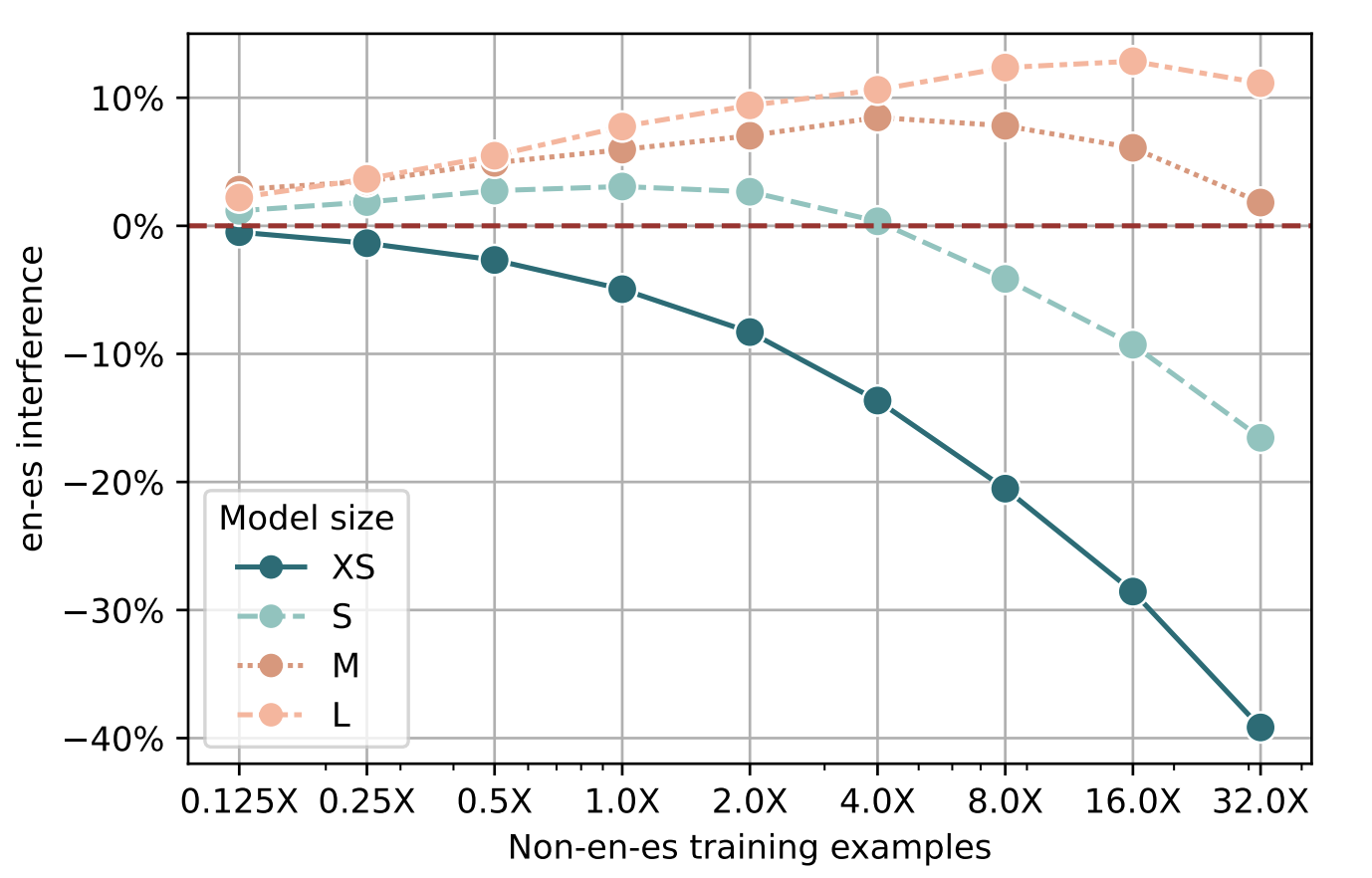

Causes and Cures for Interference in Multilingual Translation

Uri Shaham, Maha Elbayad, Vedanuj Goswami, Omer Levy, Shruti Bhosale

ArXiv, 2022

ArXiv Bibtex

@article{shaham2022causes,

title={Causes and Cures for Interference in Multilingual Translation},

author={Shaham, Uri and Elbayad, Maha and Goswami, Vedanuj and Levy, Omer and Bhosale, Shruti},

journal={arXiv preprint arXiv:2212.07530},

year={2022}

}

No language left behind: Scaling human-centered machine translation

NLLB Team

ArXiv, 2022

ArXiv Video Code Bibtex

@article{nllb2022,

title={No Language Left Behind: Scaling Human-Centered Machine Translation},

author={{NLLB Team} and Costa-jussà, Marta R. and Cross, James and Çelebi, Onur and Elbayad, Maha and Heafield, Kenneth and Heffernan, Kevin and Kalbassi, Elahe and Lam, Janice and Licht, Daniel and Maillard, Jean and Sun, Anna and Wang, Skyler and Wenzek, Guillaume and Youngblood, Al and Akula, Bapi and Barrault, Loic and Mejia-Gonzalez, Gabriel and Hansanti, Prangthip and Hoffman, John and Jarrett, Semarley and Sadagopan, Kaushik Ram and Rowe, Dirk and Spruit, Shannon and Tran, Chau and Andrews, Pierre and Ayan, Necip Fazil and Bhosale, Shruti and Edunov, Sergey and Fan, Angela and Gao, Cynthia and Goswami, Vedanuj and Guzmán, Francisco and Koehn, Philipp and Mourachko, Alexandre and Ropers, Christophe and Saleem, Safiyyah and Schwenk, Holger and Wang, Jeff},

year={2022},

journal={arXiv preprint arXiv:2207.04672}

}

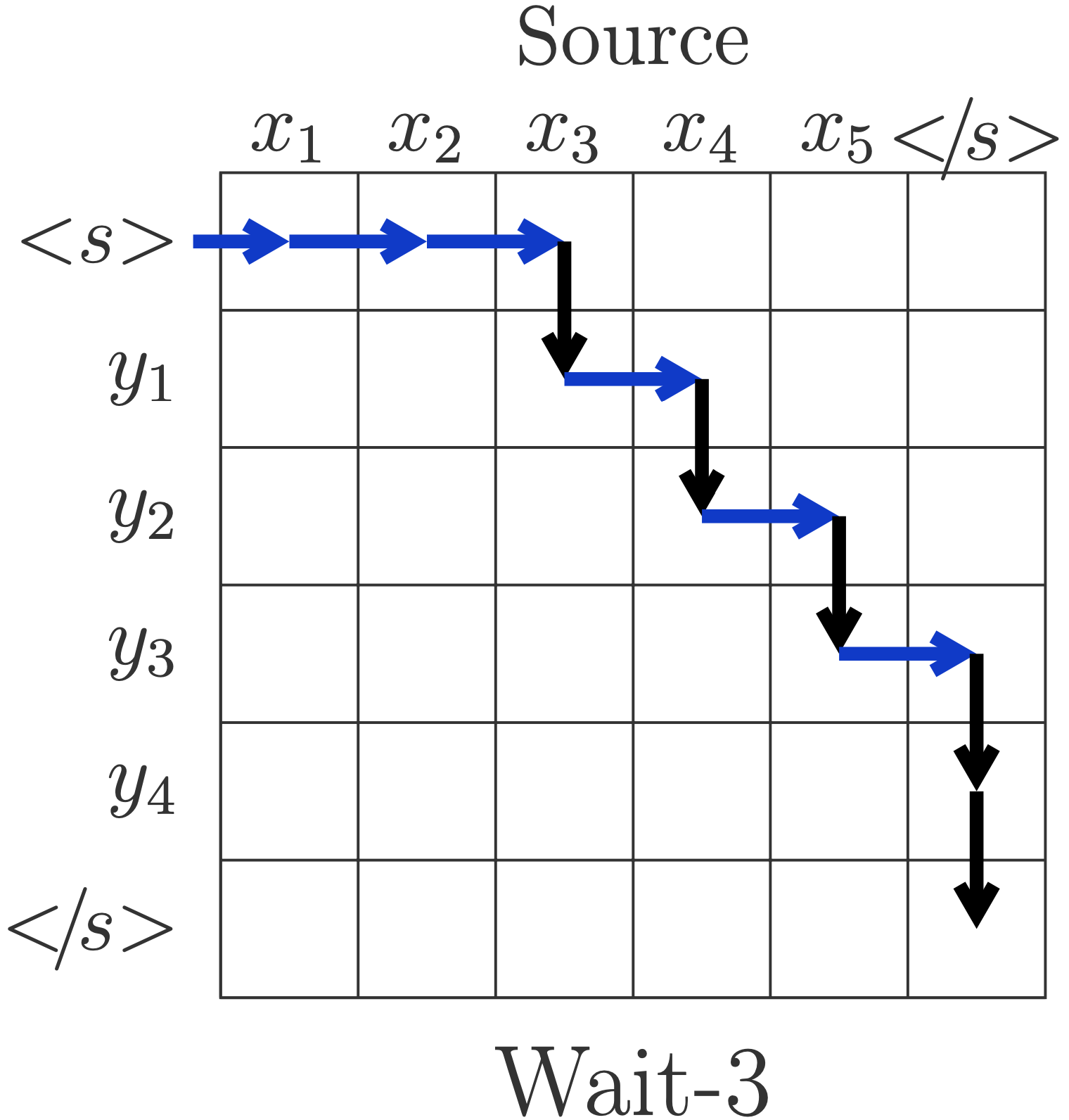

Efficient Wait-k Models for Simultaneous Machine Translation

Maha Elbayad, Laurent Besacier, Jakob Verbeek

INTERSPEECH, 2020

ArXiv In proceedings Slides Video Code Bibtex

@inproceedings{elbayad20waitk,

title={Efficient Wait-k Models for Simultaneous Machine Translation},

author={Elbayad, Maha and Besacier, Laurent and Verbeek, Jakob},

booktitle={INTERSPEECH},

year={2020}

}

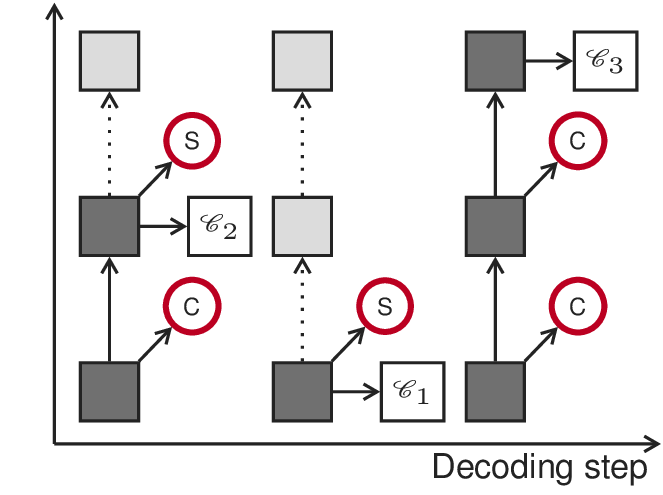

Depth-Adaptive Transformer

Maha Elbayad*, Jiatao Gu, Edouard Grave, Michael Auli

* Work done while interning at Facebook AI

Eighth International Conference on Learning Representations (ICLR), 2020

ArXiv In proceedings Video Bibtex

@InProceedings{elbayad19arxiv,

author ="Elbayad, Maha and Gu, Jiatao and Grave, Edouard and Auli, Michael",

title = "Depth-Adaptive Transformer",

booktitle = "In Proc. of ICLR",

year = "2020",

}

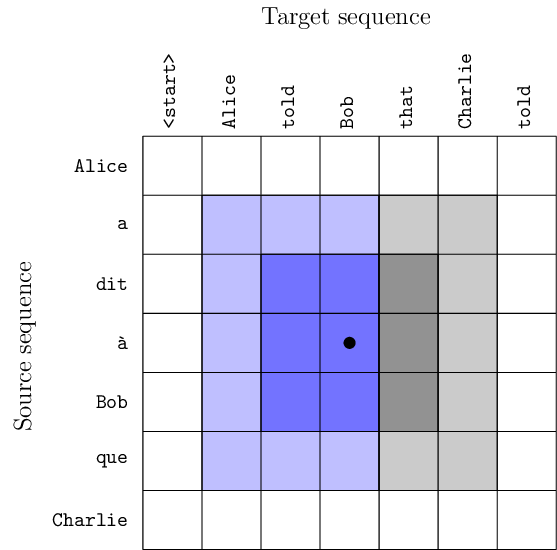

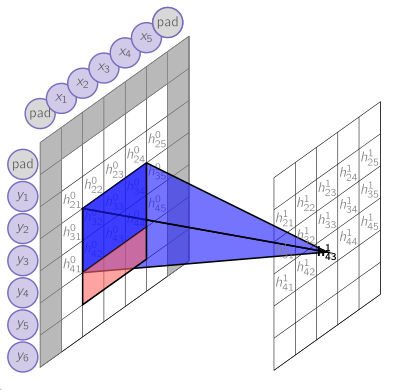

Pervasive Attention: 2D Convolutional Neural Networks for Sequence-to-Sequence Prediction

Maha Elbayad, Laurent Besacier, Jakob Verbeek

The SIGNLL Conference on Computational Natural Language Learning (CoNLL), 2018

ArXiv In proceedings Poster Code Bibtex

@InProceedings{elbayad18conll,

author ="Elbayad, Maha and Besacier, Laurent and Verbeek, Jakob",

title = "Pervasive Attention: 2D Convolutional Neural Networks for Sequence-to-Sequence Prediction",

booktitle = "Proceedings of the 22nd Conference on Computational Natural Language Learning",

year = "2018",

}

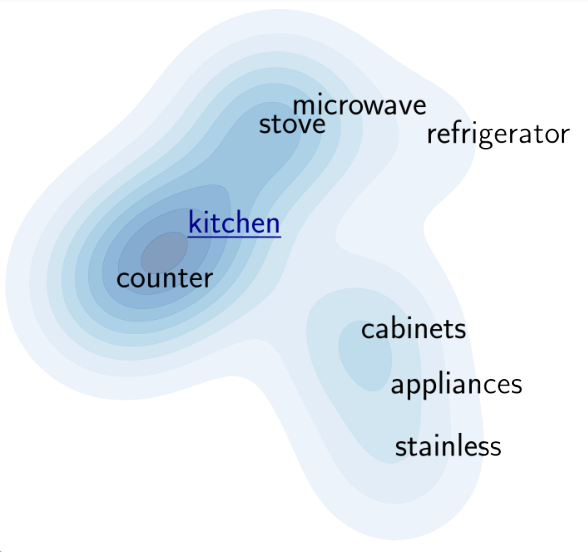

Token-level and sequence-level loss smoothing for RNN language models

Maha Elbayad, Laurent Besacier, Jakob Verbeek

Annual Meeting of the Association for Computational Linguistics (ACL), 2018

ArXiv In proceedings Slides Video Code Bibtex

@InProceedings{elbayad18acl,

author = "Elbayad, Maha and Besacier, Laurent and Verbeek, Jakob",

title = "Token-level and sequence-level loss smoothing for RNN language models",

booktitle = "Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)",

year = "2018",

}

Talks

No language left behind: Scaling human-centered machine translation

8th December 2022 at Helsinki NLP

Slides Video

No language left behind: Scaling human-centered machine translation

6th July 2022 at Morocco AI

Slides Video

Pervasive Attention: 2D Convolutional Neural Networks for Sequence-to-Sequence Prediction

29th September 2018 at Naver Labs Europe, Grenoble, France

Slides

PhD Thesis

Rethinking the Design of Sequence-to-Sequence Models for Efficient Machine Translation

Defended on June 22nd, 2020

Manuscript Slides